How to resolve disk space shortage

This topic helps you resolve disk space issues that can occur when something interferes with replication. See Solve replication problems for possible causes.

How to conserve disk space on the target

SharePlex captures and processes data much faster than it posts it with SQL statements on the target system, so the target is where most disk problems can occur, assuming the network is operational and data is being sent from the source. If you think the post queue may exceed its disk space, there may be enough free space on the source system to store the data temporarily until the Post queue clears out.

- Stop the Import process.

- Let the data accumulate on the source system until Post processes enough messages to clear the post queue.

- Start Import.

- Continue to stop and start Import until the amount of data accumulating in the post queue levels out.

When you implement this method, monitor the replication services and disk usage on the source system. On Unix and Linux systems, you can use the sp_ps script to monitor processes and the sp_qstatmon monitoring script to monitor the queues. On Windows systems, you can use the Sp_Nt_Mon utility to monitor those components. For more information, see Monitor SharePlex.

How to restore disk space

If a queue disk runs out of disk space, you may see messages similar to this in the Event Log:

11/22/07 14:14 System call error: No space left on device bu_wt.write [sp_mport(que)/1937472]

11/22/07 14:14 System call error: No space left on device bu_rls.bu_wt [sp_mport(que)/1937472]

11/22/07 14:14 Error: que_BUFWRTERR: Error writing buffer to file que_writecommit(irvspxuz+P+o.a920a64z-o.a102a64z) [sp_mport(rim)/1937472] 11/22/07 14:14 Error: sp_mport: rim_writecommit failed 30 - exiting [sp_mport/ 1937472]

11/22/07 14:14 Process exited sp_mport (from irvspxuz.domain.com queue irvspxuz) [pid = 1937472] - exit(1)

If a queue disk is almost out of free space, you might be able to add disk space without the need to resynchronize the data.

To restore disk space

- Stop SharePlex on the affected system.

- Add more disk space.

- Start SharePlex.

-

View the Event Log and look for the messages "queue recovery started" and "queue recovery complete."

- If both messages are there, SharePlex resumes processing where it stopped and the recovery succeeded. If your applications generate high volumes of transactions, there may be numerous backlogged messages in the queues. Depending on the nature of the transactions, how well the target database and the Post process are tuned, and your tolerance for latency, it might be more practical to resynchronize the data instead of waiting for replication to regain parity with transactional activity.

- If one or more queues is corrupted, the Event Log records a message like this: Bad header magic... or peekahead failure. Or, you will see the message queue recovery started, but you will not see the queue recovery complete message that signifies successful queue recovery. In this case, you must restore replication an initial state.

To restore replication to an initial state

- Run db_cleansp to restore the variable-data directory and SharePlex tables. It must be run on all systems in the affected replicationconfiguration. See the utilities documentation in the SharePlex Reference Guide.

- Synchronize the data using your method of choice, then reactivate the configuration. For more information, see Start replication on your production systems.

- You can prevent this problem from occurring again by using the SharePlex monitoring utilities to start unattended monitoring of key replication events, including queue volume alerts. For more information, see Monitor SharePlex.

How to find the ORACLE_SID and ORACLE_HOME

When setting up SharePlex to work with an Oracle database, you provide the ORACLE_SID and then SharePlex gets the ORACLE_HOME from the Windows Registry or theoratab file on Unix/Linux. Both values are stored in the SharePlex environment. SharePlex uses the Oracle libraries that are in the location specified with ORACLE_HOME.

To determine the ORACLE_SID and ORACLE_HOME being used by SharePlex

Issue the orainfo command in sp_ctrl.

sp_ctrl (mysysl11:2101)> orainfo

Oracle instance #1:

Oracle SID ora12

Oracle HOME /oracle/products/12

Oracle Version 12

Oracle instance #2:

Oracle SID ora12

Oracle HOME /oracle/products/12

Oracle Version 12

To determine the default ORACLE_SID and ORACLE_HOME on Windows

View the Oracle entry in the Registry at \HKEY_LOCAL_MACHINE\SOFTWARE\ORACLE.

To determine the default ORACLE_SID and ORACLE_HOME on UNIX and Linux

On most Unix and Linux systems the oratab file is under /etc/oratab. On Oracle Solaris systems, it is under /var/opt/oracle, but sometimes there is an oratab file in the /etc directory as well.

The entry in the file looks like the following example:

qa12:/qa/oracle/ora12/app/oracle/product/12.0

In the example, qa12 is the ORACLE_SID and /qa/oracle/ora12/app/oracle/product/12.0 is the ORACLE_HOME.

Repair out-of-sync data

This chapter contains an overview of the SharePlex Compare and Repair feature. SharePlex provides this feature as built-in support for Oracle tables to help you maintain data that is synchronized between the source and target systems.

Contents

Overview of Compare and Repair

In addition to regularly monitoring the health and performance of replication, it is good practice to compare the source and target data on a regular schedule to ensure that all of the data is still synchronized. Post detects out-of-sync conditions for the rows that it is processing, but there can be hidden out-of-sync conditions. Examples of these are DML applied on the target or an incomplete backup restore. These conditions can go undetected until Post applies an operation that affects the out-of-sync row. The SharePlex Compare and Repair feature enables you to detect hidden out-of-sync conditions and then repair them.

Note: To understand how hidden out-of-sync conditions can occur, see Understand the concept of synchronization.

SharePlex provides the following commands for comparing and repairing out-of-sync data:

- compare: Compares an individual source table to its target table or compares a wildcarded set of tables in the same schema.

- compare using: Takes input from a file to compare some or all of the tables in the active replication configuration.

- repair: Repairs an individual target table or a wildcarded set of tables in the same schema.

- repair using: Takes input from a file to repair some or all of the tables in the active replication configuration.

Supported sources

Oracle

Supported targets

Oracle

Overview of the server and client processes

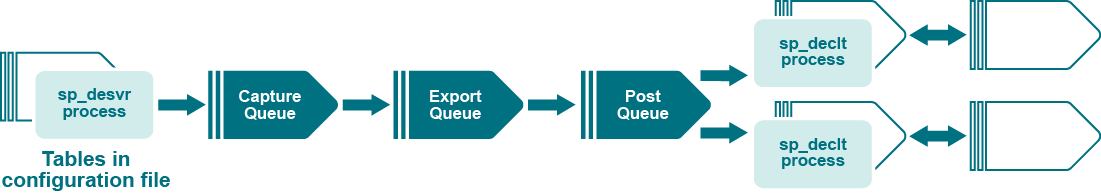

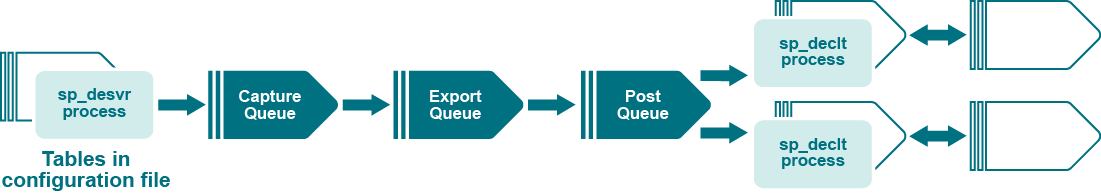

The compare and repair commands are always issued on the source system. The command spawns a server process on the source system and then sends a message through the SharePlex queues to spawn a client process on the target system.

The server and client processes then begin communication with each other. Depending on the syntax options included in the command, the processes may be multithreaded on the target. The two processes compare the source and target tables and then write the results to a log file.

How locks are managed

During a comparison, SharePlex obtains a brief exclusive lock on the source table to get read consistency for its row selection. On the target system, SharePlex obtains an exclusive lock on the target table and retains the lock for the duration of the comparison of that table. This prevents the table from being modified while SharePlex is processing it.

After locking the tables, the rows are read and sorted in identical fashion on both source and target. Next, a batch of rows is read and a check sum is performed. If the check sums match, another batch of rows is processed the same way. If any check sums do not match, the processes determine which rows are out-of-synchronization and then they create the SQL statements to repair them. If a repair command is issued, SharePlex repairs the rows.