Benchmark Factory Components

Benchmark Factory consists of the following components:

Benchmark Factory Console (Desktop Application)

The Benchmark Factory console is used to create, run, and review Jobs (tests). You can also use the console to deploy agents, access the Repository, access reports, and create connections.

The Repository

All test results are collected and stored in the repository for data analysis and reporting. Benchmark Factory collects a wide range of statistics, including overall server throughput (measured in transactions per second, bytes transferred, and so on) and detailed transaction statistics for each individual workstation producing a load. You use these statistics to measure, analyze, and predict the capacity of a system.

The test results stored in the repository are displayed when you view the Results tab for a selected job or when you use the Run Reports component (Tools | Run Reports).

Agents

Agents simulate virtual users and send transactions to the system-under-test (database).

Run Reports

Benchmark Factory Run Reports is a separate component used to view the detailed test results in a report format. You can open Run Reports from the Benchmark Factory console (Tools | Run Reports) or from the Start menu (Benchmark Factory | Run Reports).

Integration with Other Toad Products

Benchmark Factory integrates with other Toad products so that IT departments can quickly measure the capacity and performance of their systems and ensure that users experience fast response times. For instance, Benchmark Factory can run multiple workloads in conjunction with Spotlight™ products. This makes it possible to detect and diagnose issues, so users can resolve bottlenecks, slow performance, and application flaws before an application goes into production.

Understanding Benchmark Testing

Review the following for a better understanding of how Benchmark Factory performs benchmark testing on your database (system-under-test).

Agents, Virtual Users, and Automated Testing

When you load test with Benchmark Factory, you are performing automated testing. Automated testing is the process of using software, rather than actual users, to test computer hardware or software. The software acts as a "virtual" user. For example, suppose you want to test your database with two hundred virtual users over a given period of time. Benchmark Factory lets you select two hundred virtual users and the length of time for which the test runs.

Benchmark Factory uses Agents to deploy the virtual users. An Agent is a software routine that waits in the background and performs an action when a specified event occurs. One Agent can simulate thousands of virtual users at a time (limited by hardware and workload characteristics). Each virtual user has its own connection to the system-under-test.
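The relationship between an Agent and its virtual users can be illustrated with a minimal Python sketch. This is not how Benchmark Factory is implemented; the names `run_agent` and `run_virtual_user` are hypothetical, and an in-memory SQLite database stands in for the system-under-test. The sketch only shows the idea that one agent process starts many virtual users and that each virtual user opens its own connection.

```python
import sqlite3
import threading
import time


def run_virtual_user(user_id, db_path, duration_s, results):
    """Simulate one virtual user (hypothetical helper, not a product API)."""
    # Each virtual user opens its own connection to the system-under-test.
    conn = sqlite3.connect(db_path)
    executed = 0
    deadline = time.monotonic() + duration_s
    try:
        while time.monotonic() < deadline:
            conn.execute("SELECT 1").fetchone()  # placeholder transaction
            executed += 1
    finally:
        conn.close()
    results[user_id] = executed


def run_agent(num_users, db_path, duration_s):
    """Start one thread per virtual user and wait for all of them to finish."""
    results = {}
    threads = [
        threading.Thread(
            target=run_virtual_user,
            args=(user_id, db_path, duration_s, results),
        )
        for user_id in range(num_users)
    ]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return results  # maps user id -> number of transactions executed


if __name__ == "__main__":
    counts = run_agent(num_users=5, db_path=":memory:", duration_s=0.2)
    print(f"{len(counts)} virtual users executed {sum(counts.values())} transactions")
```

In a real load test the number of virtual users one machine can sustain depends on hardware and on how heavy each transaction is, which is why the limit above is described in those terms.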

Understanding Benchmarks

A benchmark is a performance test of hardware and/or software on a system-under-test. Benchmark Factory provides the option of using industry-standard benchmarks during the load testing process. Benchmarks measure peak system performance when performing typical operations.

Benchmark Factory comes equipped with the following industry-standard benchmarks:

- AS3AP Benchmark

- Scalable Hardware Benchmark

- TPC-B Benchmark

- TPC-C Benchmark

- TPC-D Benchmark

- TPC-E Benchmark

- TPC-H Benchmark

Understanding Benchmark Factory Terminology

The following terms are needed to understand the Benchmark Factory load testing process.

- The Jobs View pane is your workspace for creating and saving jobs.

- Saved jobs are listed in the Jobs View pane. See Jobs View for more information.

- The New Job Wizard is the starting point for creating Jobs. See Quickstart: Create a New Job for more information.

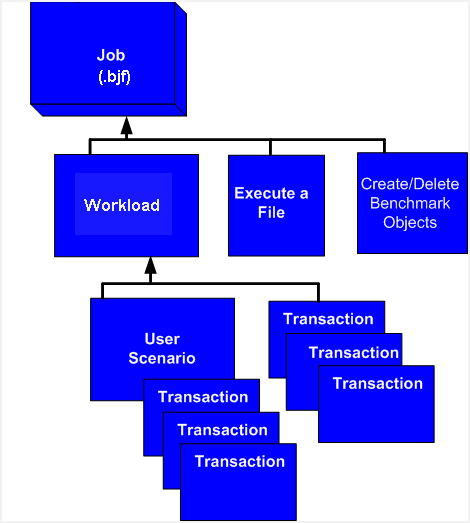

- A job consists of the following:

  - Job settings

  - A database connection

  - A workload (composed of tests)

  - A Create/Delete Benchmark Objects test

  - An Execute test

- A workload is an assembled test composed of user scenarios and/or transactions. These tests can be run with multiple virtual users. A workload or test can be one of the following types:

  - Mixed Workload: A Mixed Workload test runs for a specified time at each predetermined user load level. Each user runs a transaction mix based on the weights defined on the Transactions tab. For example, if a test has two transactions, A and B, with A having a weight of one and B having a weight of four, then on average B runs four times for each time A runs. The run order is randomly generated for each user so that the users are not all running the same transaction simultaneously. The same run order is used for that user each time the test is performed, to ensure reproducible results.

  - Replay Test: A Replay Test runs multiple transactions, each one running independently on a specified number of users. The test runs until either the defined number of executions for each transaction or a specified time limit is reached.

  - SQL Scalability Test: A SQL Scalability test executes each transaction individually at each userload for a specified time or number of executions. For example, a test could have two transactions, A and B, and two userloads of 10 and 20, with an iteration length of one minute. Transaction A executes continually for one minute at userload 10, then B does the same. Next, A runs at userload 20, followed by B, for a total time of four minutes.

- User Scenario: A series of one or more transactions that are executed in order and treated as a single transaction. A User Scenario is normally associated with user behavior simulated against the system-under-test. User scenarios are the components used to build a workload.

- Transaction: A single unit of work in the testing process, such as retrieving a Web page, executing a SQL statement, writing a file, or sending an email.

- Execute Test: Allows you to execute a file during the running of a job.

- Create/Delete Benchmark Objects Test: Configures a system-under-test for industry-standard benchmark tests that measure system performance. Each standard benchmark has been developed with specific system configuration requirements, including tables, indexes, and data.
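The weighted transaction mix and the SQL Scalability schedule described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not Benchmark Factory's actual algorithm: the function names `build_run_order` and `scalability_schedule` are hypothetical, and seeding a random generator per user is simply one common way to get a run order that differs between users but repeats exactly from run to run.

```python
import random


def build_run_order(weights, length, user_id, base_seed=0):
    """Return a weighted, reproducible run order for one virtual user.

    `weights` maps transaction name -> weight. Seeding the generator with
    the user id (an assumption of this sketch) gives each user a different
    order that is identical every time the test is repeated.
    """
    rng = random.Random(base_seed * 1_000_003 + user_id)
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=length)


def scalability_schedule(transactions, userloads, iteration_minutes):
    """List the (userload, transaction, minutes) steps of a scalability run.

    Every transaction runs on its own at each userload, one after another.
    """
    return [
        (load, txn, iteration_minutes)
        for load in userloads
        for txn in transactions
    ]


if __name__ == "__main__":
    order = build_run_order({"A": 1, "B": 4}, length=1000, user_id=7)
    print("share of B:", order.count("B") / len(order))

    steps = scalability_schedule(["A", "B"], [10, 20], iteration_minutes=1)
    for load, txn, minutes in steps:
        print(f"userload {load}: transaction {txn} for {minutes} min")
    print("total minutes:", sum(minutes for _, _, minutes in steps))
```

With weights of one and four, the share of B in a long run order approaches four fifths, and the two-transaction, two-userload schedule contains four one-minute steps, matching the four-minute total in the example above.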

How Benchmark Factory Works

The following steps provide an overview of how Benchmark Factory components interact during the load testing process.

- The Benchmark Factory Console implements the workload testing process and controls one or more distributed agent machines. Each agent machine can simulate thousands of users. Each simulated user executes transactions and records statistics.

- Benchmark Factory Agent machines simulate virtual users. The Agents send transactions to the system-under-test. The Agents record statistics that include how much data each transaction returned and how long it took to get the results. At the end of an iteration, each agent machine reports its findings back to Benchmark Factory.

- A server (system-under-test) is the database Benchmark Factory connects to. Benchmark Factory is server neutral and network protocol independent. Benchmark Factory uses the vendor client libraries of the system-under-test, so any system that the client software can support, Benchmark Factory can support. The only requirement is that the Agent machines can connect to the server by an appropriate means and that the client software supports the system being tested.

- The Repository Manager stores all testing data.

- Results are viewed from Run Reports.
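To make the reporting step concrete, the following Python sketch shows how per-agent findings for one iteration could be combined into overall throughput figures such as transactions per second. The `AgentReport` fields and the `summarize` function are assumptions made for illustration; they do not reflect Benchmark Factory's repository schema or any of its APIs.

```python
from dataclasses import dataclass


@dataclass
class AgentReport:
    """Findings one agent machine reports for an iteration (hypothetical)."""
    agent: str
    transactions: int        # transactions completed by this agent's users
    bytes_received: int      # data returned by those transactions
    total_response_s: float  # sum of the individual response times


def summarize(reports, iteration_s):
    """Combine agent reports into overall figures for one iteration."""
    total_txn = sum(r.transactions for r in reports)
    total_bytes = sum(r.bytes_received for r in reports)
    total_resp = sum(r.total_response_s for r in reports)
    return {
        "tps": total_txn / iteration_s,
        "bytes_per_second": total_bytes / iteration_s,
        "avg_response_s": total_resp / total_txn if total_txn else 0.0,
    }


if __name__ == "__main__":
    reports = [
        AgentReport("agent-1", 600, 1_200_000, 30.0),
        AgentReport("agent-2", 400, 800_000, 20.0),
    ]
    for name, value in summarize(reports, iteration_s=60.0).items():
        print(f"{name}: {value:.3f}")
```

The figures in the usage example are made up. They only show that throughput is a total divided by the iteration length, while average response time is a total divided by the transaction count.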