For more information on this topic, please see:

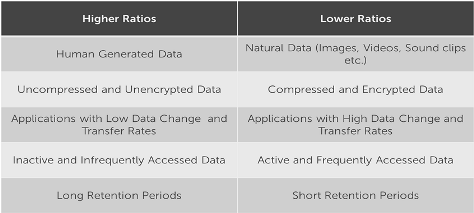

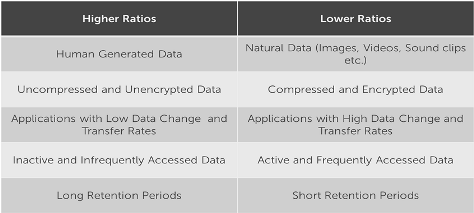

Optimization Considerations:

Many factors affect data deduplication. The following table lists factors, by type of data, that can lead to higher or lower compression ratios:

Sizing your Deduplication Cache:

To achieve the best deduplication ratio, and with it the most efficient use of repository storage and disk I/O, size the Deduplication Cache properly when you first deploy the AppAssure Core Server. The Deduplication Cache (Dedupe Cache) is a table that stores the hashes of all incoming data as it is deduplicated. If you resize the Dedupe Cache after backups have already been taken, the added space benefits only new incoming data, because it simply makes room to store new hashes. For this reason, set a sufficient size during the deployment phase.

The default Dedupe Cache size of 1.5 GB is enough to cover 800 GB of deduplicated (unique) data. Assuming a total deduplication/compression ratio of 50%, the default 1.5 GB is estimated to be sufficient for 1.5 TB of raw protected data. If your total protected data exceeds 1.5 TB, use the following calculations to determine the required Dedupe Cache size:

- Dedupe Cache size (X) = Y / 1000, where Y is the total protected data in GB and X is the Dedupe Cache size in GB. X is limited to 50% of the physical memory (RAM) installed on the AppAssure Core Server.

- Min Core Server RAM size = 2 * X

- Min Dedupe Cache Storage Space (for storing both the Primary and Secondary Dedupe Cache files) = 2 * X
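The sizing rules above can be expressed as a small calculator. This is a minimal sketch, not a vendor tool: the names `DedupeSizing`, `size_dedupe_cache`, and `ram_is_sufficient` are hypothetical, and the code encodes only the 50% deduplication/compression assumption stated in this article. It keeps the 1.5 GB default for up to 1.5 TB of protected data and applies X = Y / 1000 above that.

```python
from dataclasses import dataclass

DEFAULT_CACHE_GB = 1.5        # default Dedupe Cache size stated in the article
DEFAULT_COVERAGE_GB = 1500.0  # raw protected data the default covers (~1.5 TB)


@dataclass
class DedupeSizing:
    cache_gb: float              # X: Dedupe Cache size in GB
    min_ram_gb: float            # minimum Core Server RAM = 2 * X
    min_cache_storage_gb: float  # primary + secondary cache files = 2 * X


def size_dedupe_cache(protected_gb: float) -> DedupeSizing:
    """Apply X = Y / 1000 (Y in GB), keeping the default for <= 1.5 TB."""
    if protected_gb <= DEFAULT_COVERAGE_GB:
        x = DEFAULT_CACHE_GB
    else:
        x = protected_gb / 1000.0
    return DedupeSizing(cache_gb=x, min_ram_gb=2 * x, min_cache_storage_gb=2 * x)


def ram_is_sufficient(sizing: DedupeSizing, installed_ram_gb: float) -> bool:
    """The cache may use at most 50% of the installed RAM."""
    return sizing.cache_gb <= 0.5 * installed_ram_gb


plan = size_dedupe_cache(10_000)  # 10 TB of protected data
print(plan, ram_is_sufficient(plan, installed_ram_gb=16))
```

For 10 TB (10,000 GB) of protected data this gives X = 10 GB, so the Core Server needs at least 20 GB of RAM and 20 GB of storage for the primary and secondary Dedupe Cache files; a server with 16 GB of RAM fails the 50% check. If your data is expected to deduplicate less well than 50%, the numbers need to be adjusted upward as described in the notes.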

Example:

NOTES:

- The above is an approximate calculation based on an expected deduplication/compression ratio of 50%. If the type of protected data is expected to yield a lower ratio, adjust the Dedupe Cache size accordingly.

- The Secondary Dedupe Cache does not require additional RAM, so the memory requirement calculated above does not need to be doubled to account for it.

- The Dedupe Cache is shared by all repositories on a Core Server; however, deduplication does not happen across repositories. If the same block appears in two repositories, it is stored once in each and is not deduplicated between them.
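The repository-scoping rule in the last note can be illustrated with a toy model in which one shared set holds hashes for every repository, but each entry is keyed by repository as well as by block hash. This sketches the described behavior only and is not the Core's implementation: the function name `stored_blocks`, the use of SHA-256, and the keying scheme are all assumptions.

```python
import hashlib


def stored_blocks(writes: list[tuple[str, bytes]]) -> int:
    """Toy model: count blocks written to storage when one hash cache is
    shared by all repositories but lookups are scoped to a single repository.

    `writes` is a sequence of (repository name, block contents) pairs.
    """
    shared_cache: set[tuple[str, str]] = set()  # one cache for every repository
    stored = 0
    for repo, block in writes:
        digest = hashlib.sha256(block).hexdigest()  # hash choice is an assumption
        key = (repo, digest)  # keying by repository prevents cross-repo dedupe
        if key not in shared_cache:
            shared_cache.add(key)
            stored += 1  # new block for this repository: store it
    return stored


block = b"\x00" * 4096
print(stored_blocks([("Repo1", block), ("Repo1", block), ("Repo2", block)]))
```

Writing the same block twice to Repo1 and once to Repo2 stores it twice: the second Repo1 write is deduplicated, but the Repo2 copy is not.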

For details on changing the Dedupe Cache size and settings, refer to "Configuring Deduplication Cache Settings" in the User Guide.

Best Practices:

- Use the formulas above to size the Dedupe Cache during the AppAssure/RR Core deployment, before the first backups are transferred. This gives an early picture of the resource requirements and shows whether more memory or storage needs to be added. Configuring a sufficient Dedupe Cache size at that point also results in a higher deduplication ratio and less space used on the repository over time.

- Start backups for agents with a similar OS together to make the best use of the Dedupe Cache. For example, if you have 10 agents running Windows Server 2012 R2 and 5 running Windows XP SP3, let the backups for one group complete before starting the backups for the other. This protection order is relevant only when the Dedupe Cache is too small for the amount of protected data.

- Protect servers with similar data in batches. For example, if you have a 5-node Exchange DAG, back up these agents together, one after the other. This protection order is relevant only when the Dedupe Cache is too small for the amount of protected data.

- If you plan to enable encryption on your agents, enable it before taking the first backups. Enabling encryption later requires a new Base Image backup, which affects the deduplication ratio.

- The Dedupe Cache is loaded into RAM every time the Core Service starts and is flushed from RAM to disk when the service stops, so increasing its size adds delay to starting and stopping the Core Service. In addition, the cache contents are flushed to disk every hour and every time a Base Image finishes transferring. All repository I/O is paused during a flush, so it is highly recommended to store the Dedupe Cache files on a dedicated spindle or, better still, on a solid-state drive (SSD).

- Avoid shrinking the Dedupe Cache once it has been expanded. Shrinking it causes the cache to be deleted and recreated at the new size, which reduces the Core's deduplication capability and may consume more repository space than would otherwise be used.
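To see why protection order matters only when the cache is too small, the toy model below treats the Dedupe Cache as a bounded cache that evicts the least recently used hash. The LRU eviction policy, the function name `dedupe_hits`, and the block labels are assumptions for illustration; this article does not describe how the Core actually evicts hashes.

```python
from collections import OrderedDict


def dedupe_hits(backups: list[list[str]], capacity: int) -> int:
    """Toy model: count deduplicated blocks (cache hits) when backups run in
    the given order against a bounded, least-recently-used hash cache."""
    cache: OrderedDict[str, None] = OrderedDict()
    hits = 0
    for blocks in backups:
        for block_hash in blocks:
            if block_hash in cache:
                hits += 1
                cache.move_to_end(block_hash)  # mark as most recently used
            else:
                cache[block_hash] = None
                if len(cache) > capacity:
                    cache.popitem(last=False)  # evict least recently used hash
    return hits


win2012 = ["w1", "w2", "w3"]  # hypothetical blocks shared by the 2012 R2 agents
winxp = ["x1", "x2", "x3"]    # hypothetical blocks shared by the XP SP3 agents
grouped = [win2012, win2012, winxp, winxp]
interleaved = [win2012, winxp, win2012, winxp]
print(dedupe_hits(grouped, capacity=3), dedupe_hits(interleaved, capacity=3))  # prints: 6 0
```

With room for only three hashes, running each group back to back deduplicates 6 blocks, while interleaving the groups deduplicates none because each group evicts the other's hashes. With `capacity=6`, both orders deduplicate 6 blocks, which matches the note that ordering is relevant only for an undersized cache.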

When possible, keep the Dedupe Cache below 20 GB. A Dedupe Cache of 20 GB or less can typically be written to disk very quickly.

In environments where the Dedupe Cache must be larger than 20 GB, it is highly recommended to:

a. Move the Dedupe Cache to a dedicated disk separate from the OS and repository volumes.

b. Use faster, dedicated SSD storage for the primary and secondary Dedupe Cache. SSDs ensure that the Dedupe Cache can be written in a timely manner and prevent disruption to normal Core activities.
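How long a flush takes, and therefore how long repository I/O is paused, scales roughly with the cache size divided by the sustained write speed of the disk holding the cache files. The sketch below assumes one sequential write of the whole cache; the function name `flush_seconds` is hypothetical, and the throughput figures (150 MB/s for a single HDD spindle, 500 MB/s for a SATA SSD) are illustrative assumptions, not measured or vendor-published values.

```python
def flush_seconds(cache_gb: float, write_mb_per_s: float) -> float:
    """Estimate seconds to write `cache_gb` of cache at a sustained write rate.

    Assumes one sequential write of the whole cache and 1 GB = 1000 MB.
    """
    if write_mb_per_s <= 0:
        raise ValueError("write_mb_per_s must be positive")
    return cache_gb * 1000.0 / write_mb_per_s


# Illustrative throughputs only; measure your own storage before relying on them.
ASSUMED_MB_PER_S = {"HDD spindle": 150.0, "SATA SSD": 500.0}

for size_gb in (20, 50):
    for disk, rate in ASSUMED_MB_PER_S.items():
        print(f"{size_gb} GB on {disk}: ~{flush_seconds(size_gb, rate):.0f} s")
```

Under these assumptions a 20 GB cache flushes in about 40 seconds on the SSD versus just over two minutes on the spindle, and that pause recurs every hour and after every Base Image transfer.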